AI is here, and it brings with it a new landscape of potential innovation.

By: Vineet Tiruvadi (Founder, nForm, LLC), Marek Sirendi (Head of AI, Ascertain Systems, Inc.), Mark Michalski (CEO, Ascertain Systems, Inc.)

(view original article on Towards AI)

Driven largely by the power of the Transformer (Tf) architecture, large language models (LLMs) have brought AI to the forefront of public discussion and technological innovation [Vaswani 2023].

The surprising effectiveness of Tfs in generating human-sounding, plausible sentences has taken the world by surprise — bringing with it a fast flood of AI-powered products spanning every sector of service.

Some domains, like healthcare and defense, require capabilities beyond just plausible sentences — they require efficiency and efficacy in problem-solving. While transformers show impressive capability in language tasks, alternative architectures that trade-off expressivity for domain-specific efficiency and efficacy will become increasingly relevant.

One promising, and structurally interesting, alternative is the general class of State Space Models. The most popular amongst these in 2024 is Mamba, developed by Albert Gu and Tri Dao (Gu and Dao [2024]). Recent investigations have confirmed improved efficiency in SSMs, even finding explanations for improvements in both training and inference (Zucchet and Orvieto [2024]).

Seeing how states flow in their ambient space can give us a lot of information beyond sequence prediction. This depiction of the Lorenz Attractor shows how states can flow, yielding trajectories and measured sequences.

Seeing how states flow in their ambient space can give us a lot of information beyond sequence prediction. This depiction of the Lorenz Attractor shows how states can flow, yielding trajectories and measured sequences. Img source: https://www.linkedin.com/pulse/chaos-can-seductive-sabu-francis/

Uniquely, SSMs like Mamba may also enable improved reasoning — enabling more efficient and effective Agentic AI that executes interventions. Control Theory problems are, by nature, focused on optimizing intervention — and benchmarks that utilize them may provide a more direct assessment of an LLM’s problem-solving capabilities (Kevian et al. [2024]).

In this piece, we outline the differences between Transformer-based models (Tf) and State Space-inspired models (SSM). Starting with a brief historical context, we’ll outline key architectural highlights of SSM. We then benchmark efficiency and efficacy between SSM and Tf-based models, with a novel utilization of AgentBench and ControlBench in our assessment. We’ll leave with a brief discussion about the technical and product promise of SSMs, and the challenges to achieving that promise.

History and Motivation

While there are a lot of exciting advances in the LLM space today, SSMs have a unique advantage — they’ve got a history of utility that stretches back more than 50 years.

History

The concept of a state space has a rich history in fields outside of LLM or natural language processing. Originally proposed by Kalman in 1960 (Kalman [1960], Yamamoto [2019]), the state space approach brought an elegance to bear on previously challenging problems.

R E Kalman had quite a lifetime impact. https://www.eng.ufl.edu/newengineer/in-memoriam/remembering-rudolf-e-kalman-1930-2016

It did so by focusing less on understanding how measurements evolve, but rather to focus on how an underlying latent state evolves. Kalman’s influence on Control Theory was pervasive, with his state space framework enabling results in both inference and intervention (Antoulas [1991]).

Control Theory, a discipline of engineering, is focused on understanding and designing interventions in systems — with or without understanding Thomas et al. [2019]. The approaches in Control Theory have been developed over decades, with the recent progress in ML, AI, and compute galvanizing the stalled field. Modern efforts in Control Theory, like Koopman Operator theory and data-driven dynamics learning, are directly informed by SSMs and demonstrate promise for optimizing intervention even in systems with complex dynamics (Bevanda et al. [2021], Kaiser et al. [2018]).

![Figure 1: State Spaces vs Sequence Spaces. State Space Models are the foundation of many modern analytical techniques. By separating the state space from the measured/output sequences, we may better capture the dynamics underlying sentences. Image Source: Brunton [2019].](https://cdn.prod.website-files.com/682bb57f05a4cefe7a5c7a52/688b5bf316470ae5d9937165_1_xxiZ32cxq51TjcmN6SqllA.webp)

Figure 1: State Spaces vs Sequence Spaces. State Space Models are the foundation of many modern analytical techniques. By separating the state space from the measured/output sequences, we may better capture the dynamics underlying sentences. Image Source: Brunton [2019].

State space modeling has found significant utility outside of traditional Control Theory (Durbin and Koopman [2012]). The emphasis on capturing the state evolutions underlying observed trajectories, and not the trajectories directly, has yielded parsimonious models that bring prediction and interpretability. The idea that the evolution is reducible to a simple “dynamics” map led to more tractable solutions since they dissociated the measurements, and their independent dynamics, from the core task at hand: controlling states (Figure 1). While early, the use of state space models in healthcare has yielded exciting, effective insights (Heidari et al. [2024], Paninski et al. [2010]).

SSM integration into LLMs is more recent and at a shallower level than other domains (Gu and Dao [2024]). However, if the history of Control Theory is any indication, SSM may be the key ingredient to bringing parsimony and elegant reasoning to AI (Fahrmeir [undated]). In particular, with decision-making problems that map onto those already studied and solved via Control Theory.

Motivation

While Transformer-based models have demonstrated impressive performance in benchmarks, SSMs may directly address several of their main limitations.

Efficiency of Inference: Tf models exhibit the quadratic bottleneck by requiring communication between each pair of tokens. As applications demand larger context windows, Tf-based models yield rapidly diminishing efficiencies.

Explainability: By developing a deep statistical model of token-to-token relationships, the underlying reason for correlations remains hidden. This makes instructions arising from LLMs more correlational than causal with respect to reasoning chains.

Summarization: While Tf performance is high, their responses suffer from general errors and hallucinations suggestive of an inappropriate summarization — think averaging between apples and oranges. Forcing prediction through a single state space may yield a simpler latent map that better captures the problem underlying the text sequences carrying it.

Intervention: A major effort in AI research is focused on providing optimal interventions, beyond most-likely sentence completion. This is a surprisingly difficult challenge, and a central topic in the field of optimal control.

Architecture

Let’s understand, at the lowest level, how the parts of SSM differ from Tf.

Transformers (Tf)

Tf have a, now, well-studied architecture.

![Figure 2: Transformer architecture, where self-attention causes compute requirements to grow quadratically. Figure adapted from Vaswani et al. [2023].](https://cdn.prod.website-files.com/682bb57f05a4cefe7a5c7a52/688b5c064122c176c8650f00_1_QNrOyWQBJPw3-w6TUlPTRA.webp)

Figure 2: Transformer architecture, where self-attention causes compute requirements to grow quadratically. Figure adapted from Vaswani et al. [2023].

In a transformer, every token looks back at every previous token when making predictions. This requires computationally expensive matrix multiplication to be carried out during the inference of each subsequent token.

This pairwise communication means a forward pass is O(n2) time complexity in training (the dreaded quadratic bottleneck), and each new token generated autoregressively takes O(n) time. In other words, as the context size increases, the model gets slower.

Limitations of Transformers:

01. Quadratic Bottleneck: Transformers use a self-attention mechanism that allows every token to look at every other token in the sequence, leading to a quadratic increase in training time complexity for long sequences.

02. Memory Bottleneck: Storing information about all previous tokens requires a lot of memory, making it difficult to handle very long sequences.

03. Interpretability: Transformers have myriad parameters that are not readily interpretable. This limits the ability to debug and improve models rationally post-training.

State Space Models (SSMs)

State Space Models (SSMs) employ a Control Theory-inspired framework that focuses on relevant information from the past instead of attending to everything.

![Figure 3: Mamba Architecture more efficiently carries the sequences heretofore in a state that is then used for the next step sequence being inferred. Figure adapted from Gu and Dao [2024].](https://cdn.prod.website-files.com/682bb57f05a4cefe7a5c7a52/688b5c1779e932cadc70e835_1_FDk-fT4aSErGOQRijQgDhQ.webp)

Figure 3: Mamba Architecture more efficiently carries the sequences heretofore in a state that is then used for the next step sequence being inferred. Figure adapted from Gu and Dao [2024].

They do so by maintaining a state that captures the essential information about the past that is relevant for predicting the next element in the sequence.

State refers to the variables that govern how input variables are transformed over time, fully determining the future system behavior. In theory, once we have the state, there’s nothing else we need to know about the past to predict the future. Ideally, the state is a fairly small amount of information that captures the essential dynamics of the system. That is, the state in its state space fully compresses the past that occurred before it.

Mamba is one of the more popular implementations of SSM, alongside S4 and H3 Gu and Dao [2024], Gu et al. [2022]. By using selective state spaces, Mamba balances the benefits of SSM with the practical expectations of LLMs today. Its architecture addresses the limitations of Tf architecture in several ways:

01. Long-term Memory:Mamba can handle very long sequences because it focuses on the most relevant information and forgets less relevant details.

02. Effectiveness/Efficiency Trade-off:Transformers are more effective for short sequences due to their ability to attend to all information, but they become less efficient for longer sequences. Mamba offers a better balance between effectiveness and efficiency for long sequences, enabling longer, more detailed responses from LLMs.

03. Information Flow:Transformers rely on short-term memory (context window) while Mamba utilizes a “hard drive” like long-term memory (state).

04. Optimizes for Optimizers:One recent result explains SSM efficacy and efficiency in terms of diagonalizing the underlying inference (Zucchet and Orvieto [2024]).

Benchmarking

Efficiency in terms of compute resources becomes critical at scale and for edge applications, while efficacy in instruction-following and problem solving is crucial for AI decision-making and planning.

Preliminary benchmarks in the literature are demonstrating Mamba’s improved performance (Xu [2024], Waleffe et al. [2024]), but more systematic benchmarking is needed to better understand the capabilities and limitations of the architecture.

To compare SSM with Tf architectures, we benchmarked efficiency and efficacy, with efficacy defined in two ways:

01. Agentic capabilities as determined through AgentBench.

02. Reasoning as determined through ControlBench.

Efficiency

The Mamba architecture has a smaller footprint (Figure 3 vs 2) and lower compute requirements by design. A prior, we expect SSMs to be more efficient.

Preliminary benchmarks confirm this efficiency (Gu and Dao [2024], Xu [2024], Figure 4). Inference-time throughput of Mamba demonstrated significant improvement over Tf (Figure 4).

![Figure 4: SSMs are likely to be more performant than transformers. a) Beyond the core architectural differences, SSMs have optimizations that can yield uniformly improved training efficiency. b) The throughput at inference time can achieve significant gains over a Tf, as expected given the streamlined architecture. Figure adapted from Gu and Dao [2024].](https://cdn.prod.website-files.com/682bb57f05a4cefe7a5c7a52/688b5c33f7a7da91c5a5e34b_1_B1y8zblonFiNk3VP6nMvGg.webp)

Figure 4: SSMs are likely to be more performant than transformers. a) Beyond the core architectural differences, SSMs have optimizations that can yield uniformly improved training efficiency. b) The throughput at inference time can achieve significant gains over a Tf, as expected given the streamlined architecture. Figure adapted from Gu and Dao [2024].

This translates to quicker responses in user-facing applications and more natural interactions provided the underlying hardware libraries are suitably optimized.

Efficacy: Instruction Following

Efficacy of LLMs is a critical component in any application — does the LLM actually generate something useful towards some goal? This is particularly important in Agent-Based AI, where outputs are chained together and errors can compound (Liu et al. [2023]).

A first test of efficacy is instruction following — how well does Mamba perform in the database section of AgentBench?

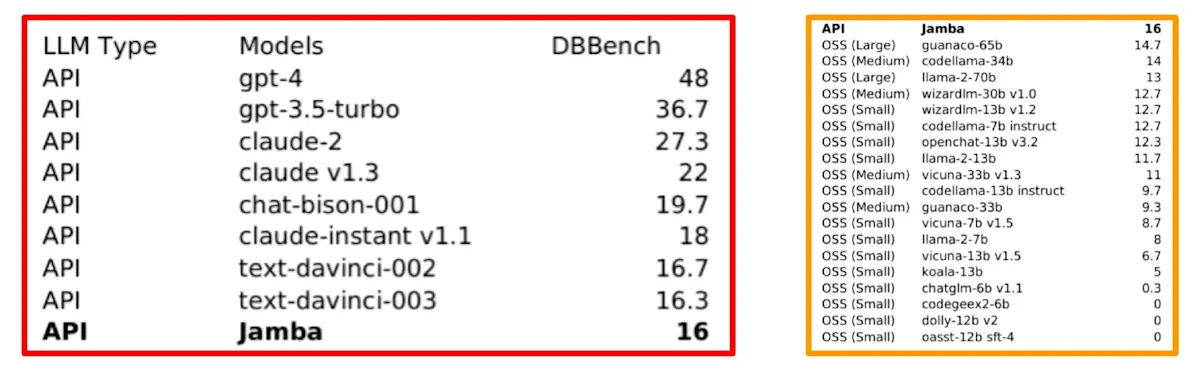

Figure 5: DBBench Results. a) Jamba, a Tf/SSM hybrid model, performed at the bottom of the API list. b) Jamba performed better than all tested open-source models.

Looking at Mamba in DBBench, we see poor performance (Figure 5), likely as a result of fine-tuning towards assistant-level performance and away from agent-level uses. This makes it clear that publicly available Mamba LLMs are currently insufficient for Agentic workflows without additional configuration.

We also assessed efficacy of SSM indirectly through a hybrid SSM/Tf model called Jamba Lieber et al. [2024]. Jamba performed at the bottom of the API list (Table 1a) and towards the top of the open-source LLM list (Table 1b). Given the relative lack of optimizations performed for SSM, we interpret this result as a promising starting point for SSM.

Efficacy: Problem Solving

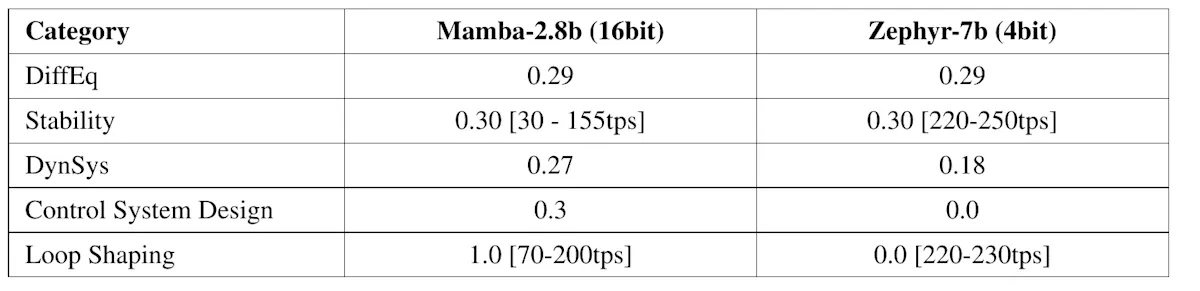

To compare effectiveness in problem solving, we used ControlBench (Kevian et al. [2024]). This benchmark uses classic problems in Control Theory to assess particular types of problem solving.

We found that SSM, while still new and relatively underoptimized compared to Transformers, achieve middle-of-the-pack performance in inference time tokens-per-second (Table 1).

One major challenge in directly comparing SSM to Tf is the lack of standardization in AI model benchmarking efforts. Direct comparison of Mamba with Tf architectures is desirable, but requires further standardization of benchmarking tools.

Our goal here was to isolate the contributions of the architecture, but several major challenges were present. Importantly, we actively ignored models that achieve higher performance (Figure 5) due the the presence of other, opaque components beyond the core LLM — this confounded our goal of comparing SSM to Tf. LLMs require careful fine-tuning to achieve the level of precision and robustness needed in agentic pipelines — and this careful fine-tuning is outside of the scope of our efforts here.

Ultimately, an empirical understanding of out-of-the-box performance can help determine the best architecture for product R&D, and our efforts aim to push us in that direction.

Contrast and Context

So how do SSMs contrast with Tf in the technical and product domains? What are we to take away from this, and other, experiments with SSMs?

Technical Considerations

SSMs are exciting for various technical reasons, and their streamlined architecture already demonstrates improvements in standard efficiency benchmarks Gu and Dao [2024]. While transformers excel at sentence completion, they are not very efficient, especially as context grows. Because they store everything from their past, their requirements grow rapidly — the quadratic bottleneck Keles et al. [2022].

Unlike Transformers, RNNs forget a lot of information, only recalling a small amount in their hidden state and discarding the rest. They are very efficient in that the map they’re carrying for prediction, in the state space, is small. Yet they are less effective as discarded information cannot be recovered. Mamba lies in the middle and attempts to capture the best of both worlds. More generally, SSMs provide a powerful alternative architecture to the transformer that carries along a summary state of the previous sequences.

Major technical challenges exist with SSMs, as we can see in our benchmarks of Mamba. One of the biggest hurdles is the lack of optimization in the SSM space compared to the Tf space. Another challenge is the potentially critical loss of expressivity — SSMs may be more appropriate for narrow-task situations and not for current efforts to generalize.

Product Implications

Real-world products operate in resource constrained, time-sensitive environments — Tf-based LLMs may not scale feasibly in the consumer space. SSMs provide a potential alternative for resource-constrained situations that may complement larger, Tf-based models.

Beyond this efficiency improvement, the ability to guide and shape SSM summarization may yield more interpretable and tunable models. In the setting of healthcare, this can translate directly to improved reliability and robustness, especially as a growing effort to build systems models in biology continues (Angarita-Rodríguez et al. [2023]).

Swarm of Ants working efficiently towards… something?

As agent-based AI grows, the ability of LLM agents to reason between words and actions will translate to a competitive edge in products, and SSMs that prioritize interpretability of their latent space mappings may enable more rational improvements to models and end-to-end performance.

Final Thoughts

In this post, we highlighted aspects of state space models (SSM) by comparing them to Transformers (Tf), and highlighting their long history and track record of impact. Their efficiencies may be crucial for agentic AI to be rolled out at scale and contribute to meaningful gains in productivity.

Our AgentBench testing of Mamba demonstrated poor-instruction following, likely because SSM-based models have not yet been fine-tuned broadly enough. We strongly urge the community to prioritize SSM for intruction-following, as agentic AI may be a natural domain for SSMs to excel.

Our ControlBench testing of Mamba demonstrated improved complex problem solving, though comparison against a Tf-based model with more parity remains needed. These preliminary results are promising, and further study of the hypothesis that the selective state space mechanism drives this improved performance is needed.

Beyond the benchmarks, we speculate that Mamba’s SSM approach opens up a wide range of interesting possibilities. In combination with other intelligence focused benchmarks (Chollet [2024]), ControlBench may better assess the higher-order capabilities we expect in AI systems. Perhaps integration of non-language state space models from domain-specific research becomes possible in SSM-powered LLMs, providing an alternative approach to reasoning that leverages the rich prior work informing real-world decision-making already. This must be studied carefully and transparently, as some critical investigations of SSMs find hints of intrinsic weaknesses (Merrill et al. [2024]).

While we’re still early in this latest resurgence of SSMs, and indeed in the LLM story more broadly, the unique architecture of SSMs are showing unique capabilities that will be of great interest to impactful AI products spanning all domains.

SpaceOdyssey.webp)